Posted on February 11, 2025

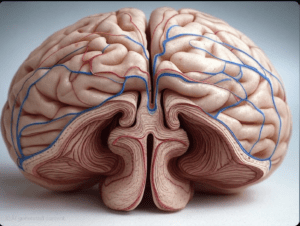

“right brain” “left brain” AI

The concept of “right brain” versus “left brain” thinking has become quite popular in discussions about human psychology and neuroscience, although it is often oversimplified in public discourse. Here’s a breakdown of the theory and its implications:

Historical Background:

- The idea originated from the study of split-brain patients in the 1960s by Roger Sperry and Michael Gazzaniga. They found that when the corpus callosum (which connects the two hemispheres) was severed, each side of the brain could function somewhat independently, leading to unique insights into how each hemisphere processes information.

Traditional Descriptions:

- Left Hemisphere:
  - Often described as logical, analytical, and detail-oriented.
  - Primarily responsible for language functions like speech, writing, and comprehension (especially in right-handed individuals).
  - Handles tasks like arithmetic, logic, and critical thinking.

- Right Hemisphere:
  - Linked with creativity, intuition, and holistic thinking.
  - Processes visual-spatial information, facial recognition, musical tones, and understanding of body language.
  - More involved in art, music, and spatial relationships.

Modern Understanding:

- Interconnectedness: While each hemisphere has specializations, they work in concert for most activities. The brain’s hemispheres communicate through the corpus callosum, allowing for integrated thought processes.

- Function Overlap: There’s significant overlap in function. For example, both hemispheres contribute to language processing, but in different ways. The left might focus more on syntax and vocabulary, while the right might handle prosody, the rhythm of speech, and emotional tone.

- Individual Variation: Not everyone’s brain functions exactly the same way. Some people might have more lateralized functions (i.e., functions more segregated to one side), while others might have more bilateral processing.

Critique and Misconceptions:

- Oversimplification: The notion that people are either left-brained (analytical) or right-brained (creative) is an oversimplification. Most people use both sides of their brain for different tasks, often simultaneously or in quick succession.

- Educational Impact: This dichotomy has sometimes led to educational strategies or career advice based on an assumed dominance of one brain hemisphere, which isn’t supported by comprehensive neuroscience.

Practical Implications:

- Learning and Development: Understanding the strengths of both hemispheres can influence educational methods, suggesting a balanced approach that includes both analytical and creative activities.

- Therapy and Rehabilitation: In cases of brain injury, knowing the specialized functions can guide recovery processes, focusing on compensating for lost functions or retraining the undamaged hemisphere.

In summary, while the left brain-right brain concept does have roots in science, it’s been somewhat mythologized in popular culture. The brain is far more complex, with both hemispheres working together to navigate the complexities of human experience.

The notion of how hearing through the left or right ear might affect brain function ties into several aspects of auditory processing and hemispheric specialization. Here’s how it relates to the left brain-right brain concept:

Auditory Pathways:

- Sound Localization: Each ear sends signals to both hemispheres, but the pathway that crosses over in the brainstem to the opposite hemisphere is the stronger one. Localization itself depends on comparing the two ears: small differences in arrival time and loudness between them, first compared in the brainstem, indicate where a sound is coming from.

- Hemispheric Specialization for Language:
  - Left Ear (Right Hemisphere): Sounds entering the left ear are routed predominantly to the right hemisphere. Although the right hemisphere is involved in language processing, especially for emotional and prosodic aspects, it’s not the primary language center for most people.
  - Right Ear (Left Hemisphere): Conversely, sounds from the right ear are routed predominantly to the left hemisphere, which is typically dominant for language in right-handed individuals (and many left-handers too).
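
To make the arrival-time cue concrete, here is a minimal sketch of how a two-microphone system might estimate it. It is not taken from any particular hearing model: the function names, the 0.18 m microphone spacing, and the simple far-field formula are all assumptions chosen for illustration.

```python
import math
import random

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air
EAR_SPACING_M = 0.18        # assumed distance between the two microphones


def best_lag(left, right, max_lag):
    """Return the lag (in samples) at which `right` best matches `left`.

    A positive lag means the sound reached the right channel later,
    which suggests the source is on the left side.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, sample in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                score += sample * right[j]  # brute-force cross-correlation
        if score > best_score:
            best, best_score = lag, score
    return best


def azimuth_degrees(lag, sample_rate):
    """Turn a lag into a rough angle (0 = straight ahead, negative = left)."""
    itd_seconds = lag / sample_rate
    # Far-field approximation: itd = spacing * sin(angle) / speed_of_sound
    ratio = itd_seconds * SPEED_OF_SOUND_M_S / EAR_SPACING_M
    ratio = max(-1.0, min(1.0, ratio))  # clamp so asin stays defined
    return -math.degrees(math.asin(ratio))


# Synthetic test signal: a noise burst that reaches the right channel
# 5 samples after the left channel.
rng = random.Random(0)
burst = [rng.uniform(-1.0, 1.0) for _ in range(200)]
delay = 5
left = burst + [0.0] * delay
right = [0.0] * delay + burst

lag = best_lag(left, right, max_lag=20)
angle = azimuth_degrees(lag, sample_rate=44100)
print(lag, round(angle, 1))
```

A real system would also use loudness differences and would have to cope with echoes and noise, which this sketch ignores.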

Dichotic Listening:

- Dichotic Listening Tests: These experiments involve presenting different auditory stimuli to each ear at the same time. They’ve shown that there’s often a “right-ear advantage” for verbal material due to the left hemisphere’s specialization for language processing. This doesn’t mean the left hemisphere hears better but rather that it’s better at processing linguistic information.
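
One common way to summarize a dichotic listening result is a laterality index, which compares the number of items correctly reported from each ear. The sketch below assumes the usual form, 100 × (R − L) / (R + L); the function name and the example counts are made up for illustration.

```python
def laterality_index(right_correct, left_correct):
    """Return 100 * (R - L) / (R + L).

    Positive values indicate a right-ear advantage, negative values a
    left-ear advantage, and 0 means no difference between the ears.
    """
    total = right_correct + left_correct
    if total == 0:
        raise ValueError("no correct reports from either ear")
    return 100.0 * (right_correct - left_correct) / total


# Hypothetical listener: 18 items reported correctly from the right ear,
# 12 from the left ear.
print(laterality_index(18, 12))  # 20.0
```

A single score like this says nothing about why one ear did better, so it is best read alongside the caveats about individual differences and context.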

Implications for Brain Lateralization:

- Language Perception: If you want to test or leverage brain lateralization for language, having someone listen to verbal instructions or information through the right ear might slightly enhance processing due to left hemisphere dominance for language in most people.

- Emotional and Musical Perception: Conversely, sounds with emotional content or music might be better processed when heard through the left ear, as the right hemisphere plays a larger role in these areas.

Caveats and Complications:

- Individual Differences: Not everyone has the same level of hemispheric dominance or symmetry. Left-handers might show different patterns, and there can be variations even among right-handers.

- Cross-Talk: Even though each ear’s input is routed mainly to the opposite hemisphere, there’s significant cross-talk between the hemispheres, so both sides get involved in processing auditory information.

- Contextual Influence: The nature of the sound (e.g., speech, music, noise), the task at hand (like memorizing words vs. interpreting a melody), and even emotional state can alter how sounds are processed across the brain.

Practical Application:

- Education and Therapy: Techniques in education or speech therapy might consider ear dominance to optimize learning or rehabilitation, though this would be one of many factors.

- Research: Dichotic listening tests are used in cognitive neuroscience to study brain function and language processing, providing insights into brain organization and potential deficits.

In conclusion, while there’s a basis for considering how sounds enter each ear in relation to brain function, the impact is nuanced and part of a broader, interconnected system of how our brains process auditory information.

Here’s a thought experiment on how the understanding of auditory pathways, brain lateralization, and dichotic listening could influence the future development of AI:

1. Enhanced Speech Recognition and Processing:

- Binaural Audio Input: AI could be designed to process audio input from “ears” or microphones simulating human binaural hearing, providing a richer understanding of speech in noisy environments by leveraging principles of sound localization and how the brain processes sounds from each ear differently.

- Hemispheric Simulation: AI could incorporate models that simulate the dual processing of language (syntax in one “hemisphere” and prosody in another), leading to more nuanced understanding of speech, including emotional tone, sarcasm, or emphasis.

2. Personalized AI Interaction:

- User’s Auditory Profile: AI systems could adapt to individuals’ auditory processing characteristics, perhaps identified through initial assessments similar to dichotic listening tests, to tailor communication styles or learning aids to match the user’s natural auditory processing strengths.

- Emotion Recognition: By mimicking the right hemisphere’s role in processing emotional content in speech, AIs could become better at interpreting and responding to human emotions through voice, enhancing human-AI interaction in contexts like mental health support or customer service.

3. Education and Learning Tools:

- Dual-Hemisphere Learning Models: Educational AI could design programs that engage both analytical (left hemisphere-like) and creative (right hemisphere-like) learning activities simultaneously or sequentially, optimizing for different learning styles and potentially increasing retention and understanding.

- Auditory Learning Enhancements: Using insights from how each ear’s input is processed, AI could develop teaching methods that emphasize listening through one ear for certain subjects or exercises, potentially enhancing learning based on the user’s brain laterality.

4. Neurological Research and Therapy:

- AI in Brain Mapping: AI could assist in real-time mapping and analysis of brain functions related to auditory processing, aiding in research or the development of personalized therapeutic approaches for conditions affecting auditory pathways.

- Virtual Reality and Therapy: AI could simulate environments or scenarios to test or rehabilitate auditory processing, possibly using VR to create dichotic listening conditions to enhance or retrain auditory discrimination or cognitive functions.
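
As a rough illustration of what “creating dichotic listening conditions” could mean in software, the sketch below builds a stereo clip with a different sound in each channel, using only Python’s standard library. Real dichotic tests typically use verbal material such as spoken syllables; the pure tones, frequencies, and function names here are stand-ins assumed for the example.

```python
import io
import math
import struct
import wave


def dichotic_tones(left_hz, right_hz, seconds=1.0, sample_rate=44100, amplitude=0.5):
    """Build 16-bit stereo PCM frames with a different sine tone per channel.

    `amplitude` should stay between 0 and 1 so samples fit in 16 bits.
    """
    frames = bytearray()
    for n in range(int(seconds * sample_rate)):
        t = n / sample_rate
        left = int(32767 * amplitude * math.sin(2 * math.pi * left_hz * t))
        right = int(32767 * amplitude * math.sin(2 * math.pi * right_hz * t))
        frames += struct.pack("<hh", left, right)  # interleaved: left, right
    return bytes(frames)


def write_wav(target, frames, sample_rate=44100):
    """Write stereo 16-bit frames to a file path or a binary file-like object."""
    with wave.open(target, "wb") as wav:
        wav.setnchannels(2)   # stereo: one channel per ear
        wav.setsampwidth(2)   # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(frames)


# Half a second with 440 Hz in the left ear and 880 Hz in the right ear,
# written to an in-memory buffer instead of a file on disk.
clip = dichotic_tones(440.0, 880.0, seconds=0.5)
buffer = io.BytesIO()
write_wav(buffer, clip)
```

Passing a filename such as `"dichotic.wav"` instead of the buffer would save the clip for playback over headphones, which are needed to keep the two channels separate.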

5. Music and Art Creation:

- Creative AI: By modeling the right hemisphere’s involvement in music and spatial processing, AI might generate or assist in creating music or art that resonates more deeply with human listeners, understanding and mimicking the emotional and spatial dimensions of sound.

- Sound Design for Immersive Experiences: AI could design soundscapes or audio experiences that exploit human auditory localization for more immersive virtual environments or gaming.

6. Security and Accessibility:

- Voice Biometrics: Understanding how each person’s brain might process audio could lead to more secure voice recognition systems that consider not just voice patterns but how they’re processed by the listener.

- Accessibility Tools: AI could develop tools that help those with auditory processing disorders by simulating or compensating for the specific deficits in how they hear or process sounds.

Considerations:

- Ethical Use: With such intimate knowledge of human brain function, there’s a responsibility to ensure AI applications respect privacy and do not exploit or manipulate auditory perception.

- Complexity and Individuality: Human brains vary, so AI must be flexible, learning from each user to truly personalize its approach rather than applying a one-size-fits-all model.

This thought experiment suggests a future where AI not only understands but also mimics or complements human auditory processing, leading to more natural, effective, and personalized interactions across various domains.
